Imagine you are walking down the street, and you hear someone call your name from your right side. You turn your head in that direction. You can see the person who called out to you in front of you now. But when they call your name again, despite you having turned your head, it still sounds as though the sound is coming from your right. In fact, no matter what you do or how you turn, you always hear your name being called from your right, irrespective of where they are. This would break the rules and the reality of your world.

Or what if you were playing an open-world fantasy video game, and your character is exploring the top of a hill for treasure. The game tells you that your quest objective, the buried treasure chest, will start making a pinging sound that gets louder the closer you get to it. You can hear the pinging sound, but you can’t quite tell if it’s coming from in front of you or behind you. As you walk, you can’t tell if it’s getting louder, softer, or staying the same. How are you supposed to find your objective then?

These ambiguities come about for the same reason – your brain is missing the subtle audio cues that come in real life when your head moves and changes its position relative to the sound sources.

Audio has become a vital component for the immersion and realism of the media we consume. With current technology, the experiences themselves are becoming more immersive and more realistic, but without head tracking, the immersion can break due to the brain being unable to resolve key ambiguities. If you want an expansive, engaging, and exciting experience, there is a limit to how far you can get without head tracking.

How We Localize Sound in the Real World

Sound is integral to our ability to perceive and interact with the environment around us. Our two ears play a vital role in allowing our brains to quickly and precisely identify the location and source of sounds. For example, sounds originating from a person’s left side will reach their left ear before their right ear, something referred to as Interaural Time Difference (ITD). There will also be a difference in the sound pressure level perceived by the two ears, mainly because the head partially blocks (shadows) the sound on its way to the far ear, an effect that is strongest at high frequencies. This is referred to as Interaural Level Difference (ILD).
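To get a feel for how small these time differences are, here is a minimal sketch using Woodworth's classic spherical-head approximation, ITD ≈ (r / c) · (θ + sin θ). The head radius, the speed of sound, and the function name `woodworth_itd` are illustrative assumptions, not values taken from any particular product, and the formula only applies to distant sources within 90 degrees of straight ahead.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly quoted average head radius (assumed)


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Approximate ITD in seconds for a distant source.

    azimuth_deg: 0 is straight ahead, positive is to the listener's right,
    valid for -90..90 degrees. A positive result means the sound reaches
    the right ear first; a negative result means the left ear leads.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (-90, -30, 0, 30, 90):
        itd_us = woodworth_itd(azimuth) * 1e6
        print(f"azimuth {azimuth:+4d} deg -> ITD {itd_us:+7.1f} microseconds")
```

Even for a source directly to one side, the difference stays well under a millisecond, which gives a sense of how fine-grained the timing cues are that the brain works with.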

The process of determining the location of a sound source, in direction and distance, is called sound localization. However, our hearing system consists of not only our ears, but also our brain, head, and shoulders. Sound waves interact with our head, shoulders, and the pinnae and ear canals of our ears before they reach our eardrums. The interaction of sound waves with these parts of our anatomy results in asymmetrical reflections, which change the frequency spectrum of the sound that arrives at each eardrum.

This process of how the ear receives sound from a point in space can be characterized by a Head Related Transfer Function (HRTF). An HRTF measures the changes to sound caused by the way sound scatters and reflects off a person’s head, shoulders and ears, before ultimately entering the ear canal. Each person has a unique HRTF, specific to the anatomy of their hearing system.
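In practice, an HRTF is usually applied in the time domain as a pair of head-related impulse responses (HRIRs), one per ear, and a mono signal is convolved with each of them. The sketch below shows that idea using only the Python standard library. The two HRIRs are made-up placeholder values chosen to make the effect easy to see (a delay and an attenuation at the far ear); they are not measured data, and `render_binaural` is a hypothetical helper name.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right HRIR pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Placeholder HRIRs for a source on the listener's left (NOT measured data):
# the left ear hears the sound immediately at full level, the right ear
# hears it two samples later at half the level.
hrir_left = [1.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.5]

click = [1.0, 0.0, 0.0, 0.0]  # a single-sample click
left, right = render_binaural(click, hrir_left, hrir_right)

if __name__ == "__main__":
    print("left ear: ", left)
    print("right ear:", right)
```

Real HRIRs are hundreds of samples long and differ for every direction and every listener, so a renderer typically stores a whole set of them and picks or interpolates the pair that matches the source direction.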

The brain uses these differences in time (ITD), level (ILD) and spectral content to localize sounds. Our brains continually process all this information in real time to build and update a sound map of the environment around us. That map covers not only left and right, but also front and back, above and below, and distance: the full volume of space around you.

Creating Immersion with Audio

This brings us to spatial audio. In short, it is a broad umbrella term, used to describe an assortment of audio playback technologies that enable us to perceive and experience sound in three dimensions, all around us. For a more in-depth explanation, check out this blog post, or this on-demand webinar.

Consider a traditional 5.1 or 7.1 surround sound speaker setup in a home theater. This is a setup that many people own, and thoroughly enjoy. However, some of the downsides of this setup include:

i) needing good quality speakers which can be pretty expensive

ii) having a room that either sounds good enough acoustically or is acoustically treated such that these speakers can be enjoyed to the fullest (without bothering the neighbors!)

iii) needing the expertise to optimize the performance of these speakers in that room.

Another problem, with traditional headphones, headsets and earbuds, is that they tend to create the feeling that all sounds are originating within the head. Spatial Audio technology solves both by:

i) enabling us to create an immersive, engaging, and realistic soundscape, all while being easily applied to headphones, headsets and earbuds, thereby drastically reducing the barrier of entry and accessibility.

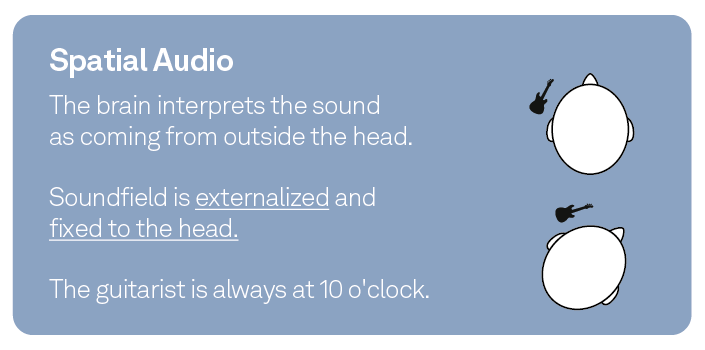

ii) enabling us to externalize the sound and create an immersive soundscape for the listener. This brings us to one of the fundamental problems with the form factor of conventional headphones, headsets, and earbuds – the sound field moves with you, which is not what happens when we listen to external speakers or interact with the physical world around us.

How Immersion Breaks Down

Let’s imagine you are walking on the sidewalk of a road, and a car honks to your left. If you rotate your head to look at the car, the honking car should now be in front of you. The sound field has stayed stationary relative to the external world; it is the orientation of your head that has changed. Now let’s imagine you are watching a movie with headphones on, and within the movie, a car honks to your left. If you rotate your head in that direction, the sound field is going to move with you, because it is anchored to the headphones and does not respond to your head motion. The honk still sounds as though it is coming from your left. This contrasts with what happens in the real world and is one of the fundamental causes of the sound field collapsing within one’s head, thereby shattering immersion.

Figure 1: Spatial Audio w/o Head Tracking

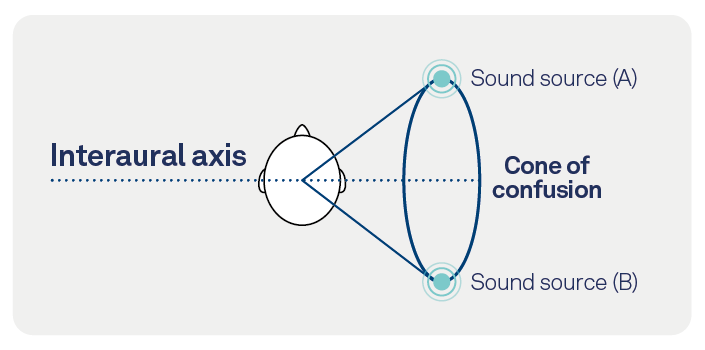

Another issue one might run into is Front Back Confusion. We humans aren’t very good at telling apart sounds that produce the same time and level differences between our ears, even if one is in front of us and one is behind us. This happens when the sources lie on the same Cone of Confusion. The Cone of Confusion is an imaginary cone that extends outwards from the center of the head along the axis running through both ears, such that every sound source on its surface has the same difference in distance to the two ears. See the following diagram:

Figure 2: Cone of Confusion

As you can see from this image, two sound sources, A and B, are located on the Cone of Confusion: each ear is exactly as far from A as it is from B. Accordingly, despite one of them being to the front and one to the back, they produce identical ITD and ILD, and are thus very difficult to differentiate between and localize, leading to Front Back Confusion.
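A quick numerical check makes this concrete. The sketch below treats the ears as two points on either side of the head center, which is a deliberate simplification that ignores the head itself, and compares a source in front of the listener with its mirror image behind. The ear offset, source distance, and function name are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)
EAR_OFFSET = 0.0875     # m from head center to each ear (assumed)


def itd_two_point(azimuth_deg, distance=2.0):
    """ITD in seconds for a two-point ear model, head omitted.

    azimuth_deg is measured clockwise from straight ahead, so 30 is
    front-right and 150 is back-right. Positive means the right ear
    hears the sound first.
    """
    az = math.radians(azimuth_deg)
    x = distance * math.sin(az)  # left/right position, right is positive
    y = distance * math.cos(az)  # front/back position, front is positive
    d_left = math.hypot(x + EAR_OFFSET, y)
    d_right = math.hypot(x - EAR_OFFSET, y)
    return (d_left - d_right) / SPEED_OF_SOUND


front = itd_two_point(30.0)   # source A, front-right
back = itd_two_point(150.0)   # source B, mirrored to back-right

if __name__ == "__main__":
    print(f"front ITD: {front * 1e6:.2f} microseconds")
    print(f"back ITD:  {back * 1e6:.2f} microseconds")
```

The two values come out the same (up to floating-point rounding), because mirroring a source from front to back leaves its distance to each ear unchanged.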

Get Real by Moving Your Head

Now… what if we were to add a Head Tracker into the equation? With the addition of a Head Tracker, the spatial processing can interpret the motion of your head relative to the virtual center of the sound field and adjust the ITD, ILD, and HRTF based on the head motion. This enables the sound field to stay anchored in space. As you turn your head towards a sound, you will hear it oriented to be in front of you. These precise responses to minute movements are a crucial factor in not only resolving Front Back Confusion but also in creating an immersive user experience.
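At its core, this adjustment is a change of reference frame: the renderer subtracts the head's rotation from the source's position in the world and spatializes the result. The sketch below shows this for yaw (left/right rotation) only and demonstrates why a small head turn separates a front source from a back one. The function names are hypothetical, and `lateral_cue` is a stand-in for ITD that assumes a distant source and ignores the head's acoustic shadow.

```python
import math


def wrap_degrees(angle):
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Direction of a world-anchored source as seen from the rotated head.

    Both angles are measured clockwise from the same world 'forward'
    direction; positive yaw means the head has turned to the right.
    """
    return wrap_degrees(source_azimuth_deg - head_yaw_deg)


def lateral_cue(azimuth_deg):
    """Value proportional to ITD for a distant source (simplified)."""
    return math.sin(math.radians(azimuth_deg))


# A car honks on your left (-90). Turn your head 90 degrees to the left
# and the car should now be straight ahead (0).
car_after_turn = relative_azimuth(-90.0, -90.0)

# Front-right (30) and back-right (150) sources sound alike at rest...
front_before = lateral_cue(relative_azimuth(30.0, 0.0))
back_before = lateral_cue(relative_azimuth(150.0, 0.0))

# ...but a 10 degree turn to the right moves their cues in opposite directions.
front_after = lateral_cue(relative_azimuth(30.0, 10.0))
back_after = lateral_cue(relative_azimuth(150.0, 10.0))

if __name__ == "__main__":
    print("car after head turn:", car_after_turn)
    print(f"front source: {front_before:.3f} -> {front_after:.3f}")
    print(f"back source:  {back_before:.3f} -> {back_after:.3f}")
```

The front source drifts toward the center as you turn toward it, while the back source drifts further to the side. That opposite motion is the cue the brain uses to tell them apart, and it only exists if the rendering reacts to head movement.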

Let’s imagine you are playing a highly immersive virtual reality First Person Shooter (FPS). You load into a level on an alien ship that is pitch black, and there are aliens spawning all around you. If you were to hear footsteps that happen to originate along the Cone of Confusion, they would be very difficult to localize. With a head tracker, however, the small movements you make with your head, and the information they provide to your brain in real time, would go a very long way in resolving Front Back Confusion, enabling you to accurately localize those alien footsteps.


Figure 3: Spatial Audio with Head Tracking

Similarly, we also have difficulties in gauging the elevation of sound sources without visual cues. It would be extremely difficult to accurately give you the impression that a source is elevated above you if you were using speakers in a surround sound configuration, or traditional headphones. Spatial audio technology makes it possible to create and render the necessary filters and cues to provide a sense of elevation. Add a head tracker into the mix, and once again, the micro movements of your head and the way the soundscape reacts to them will help you accurately pinpoint the location of the alien hiding in the ventilation shaft above you! Lo and behold, you have beaten the dreaded dark level of the alien ship. Well done!

Lackluster or Blockbuster?

Without head tracking, there is a hard limit to how realistic and immersive you can make your audio experience. Head tracking enables us not only to externalize the sound, preventing it from collapsing within our head, but also to accurately localize it in 3D space around us, resolving ambiguities such as Front Back Confusion.

If you’re interested in this technology to enhance your hearable device, check out our RealSpace solution! RealSpace is a head-tracked, multichannel, binaural rendering engine for a truly immersive experience. RealSpace is capable of rendering mono, stereo, multichannel, or ambisonic audio content into spatialized binaural audio directly on true wireless stereo (TWS) earbuds or audio headsets. It is available on a wide array of platforms, including PCs and laptops, mobile phones, VR/AR devices, and embedded DSP for a headset or TWS form factor. Based on accurate, physics-based environmental modeling, RealSpace can deliver a memorable and immersive audio experience.