The advent of Neural Processing Units (NPUs) has revolutionized the field of machine learning, enabling the efficient execution of complex mathematical computations required for deep learning tasks. By optimizing matrix multiplications and convolutions, NPUs have greatly enhanced the capabilities of AI models across various domains, from server farms to battery-operated devices.

The emergence of TinyML (Tiny Machine Learning), which focuses on running machine learning algorithms on resource-constrained embedded devices, has pushed the boundaries of AI even further. TinyML aims to bring AI capabilities to billions of edge devices, allowing them to process data and make decisions locally and in real time, without relying on cloud connectivity or powerful computing resources.

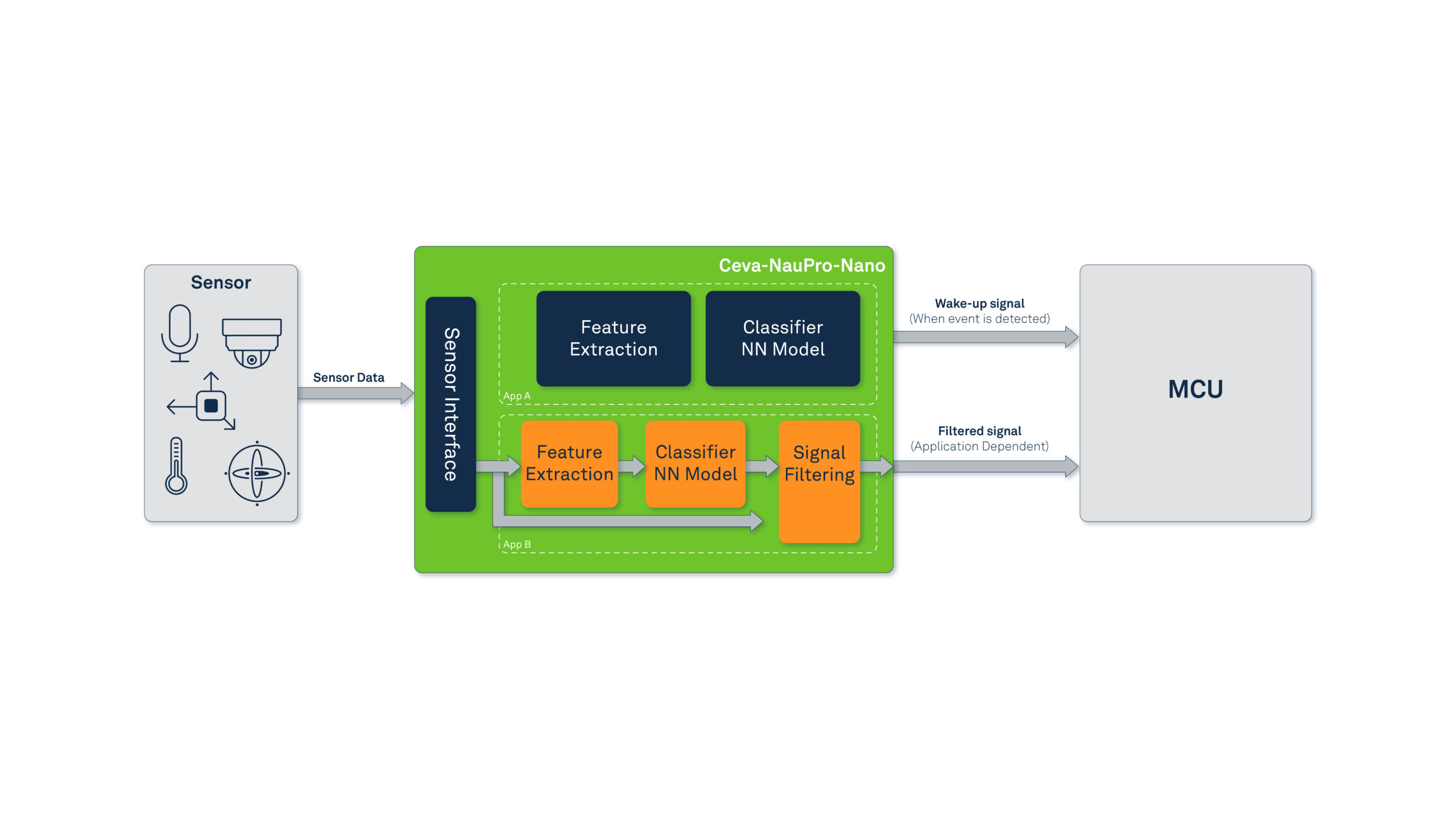

Building on the foundation of NPUs and the emerging field of TinyML, Ceva has introduced the groundbreaking Ceva-NeuPro-Nano. This compact and efficient NPU IP has been meticulously designed with TinyML applications in mind, offering an unparalleled balance between performance and power efficiency. Ceva-NeuPro-Nano’s unique architecture is optimized for running complete TinyML applications end to end, from data acquisition and feature extraction to model inference, making it an ideal self-sufficient solution for resource-constrained, battery-operated devices.

Source (Ceva)

Design Philosophy:

The vision for Ceva-NeuPro-Nano was shaped by a deep analysis of user perspectives, which revealed the need for a solution that is both powerful and user-friendly. The design philosophy prioritized software ease of use and application-level challenges rather than focusing only on individual neural network layers. This approach ensures that Ceva-NeuPro-Nano can handle neural network, control, and DSP workloads efficiently and seamlessly.

The primary objective was to create an NPU for embedded AI that delivers class-leading performance without compromising power efficiency. To that end, Ceva-NeuPro-Nano’s cutting-edge hardware design has been tailored to the low-power, high-efficiency requirements of TinyML applications running on resource-constrained edge devices.

Software First:

Ceva-NeuPro-Nano’s comprehensive software ecosystem supports the two major TinyML inference frameworks, TensorFlow Lite for Microcontrollers and microTVM, which eases integration into a wide range of TinyML applications. Unlike many other solutions, Ceva-NeuPro-Nano is not just an accelerator dependent on a host microcontroller unit (MCU); it is a fully programmable processor with exceptional Neural Network (NN) and Digital Signal Processing (DSP) capabilities, making it future-proof and adaptable to new layers and operators.

In addition to supporting the major TinyML frameworks, Ceva-NeuPro-Nano comes with an NN library for cases where model handcrafting is required, as well as a DSP library that provides full DSP functionality. Together, these libraries increase Ceva-NeuPro-Nano’s adaptability and versatility, letting developers tailor it to their unique application requirements.

Innovative Architecture:

The Ceva-NeuPro-Nano architecture introduces several innovative features that address key pain points in TinyML applications. It processes compressed model weights directly, eliminating memory-intensive decompression, which makes it ideal for memory-constrained TinyML devices. Its advanced data cache system simplifies memory management and improves overall efficiency by removing the complexity of Direct Memory Access (DMA) scheduling.
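The article does not describe the weight compression format Ceva-NeuPro-Nano uses, so the following is only an analogy. It treats plain 4-bit packing (two signed weights per byte) as a stand-in for a compressed format and computes a dot product by unpacking each weight at the moment it is consumed, so no decompressed copy of the weight tensor is ever materialized. The function names (`pack_int4`, `nibble_to_int`, `dot_packed_int4`) are hypothetical and illustrate the principle, not the hardware.

```python
def pack_int4(weights):
    """Pack signed 4-bit weights (-8..7) two per byte, low nibble first."""
    weights = list(weights)
    if len(weights) % 2:
        weights.append(0)  # pad to an even count
    packed = bytearray()
    for lo, hi in zip(weights[0::2], weights[1::2]):
        if not (-8 <= lo <= 7 and -8 <= hi <= 7):
            raise ValueError("weights must fit in signed 4 bits")
        packed.append((lo & 0x0F) | ((hi & 0x0F) << 4))
    return bytes(packed)


def nibble_to_int(n):
    """Sign-extend a 4-bit two's-complement value."""
    return n - 16 if n & 0x8 else n


def dot_packed_int4(packed, activations):
    """Dot product that reads each weight straight out of the packed buffer.

    A weight is unpacked into a temporary only when it is used, so no
    full-size decompressed copy of the weights is ever allocated.
    """
    acc = 0
    for i, a in enumerate(activations):
        byte = packed[i // 2]
        nibble = (byte >> 4) if i % 2 else (byte & 0x0F)
        acc += nibble_to_int(nibble) * a
    return acc


weights = [3, -2, 7, -8, 1]
packed = pack_int4(weights)
print(len(packed))                                    # 3 (vs. 5 bytes at int8)
print(dot_packed_int4(packed, [10, 20, 30, 40, 50]))  # -70
```

The memory saving comes from never holding both the compressed and the expanded weights at once; only a single unpacked value exists at any time.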

Ceva-NeuPro-Nano’s hardware handles non-linear activation functions, enabling it to support a diverse range of machine learning models. It also integrates cutting-edge power-saving technologies to ensure high efficiency, making it well suited for power-sensitive edge devices. With hardware-level support for both symmetric and asymmetric quantization schemes, as well as native 4-bit data types (which halve weight storage compared with 8-bit), Ceva-NeuPro-Nano can accommodate a wide variety of TensorFlow models while processing and storing data more efficiently.
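To make the two quantization schemes concrete, here is a minimal sketch of affine quantization in the form used by the TensorFlow Lite quantization specification, where a real value is approximated as `scale * (q - zero_point)`. Symmetric quantization fixes the zero point at 0; asymmetric quantization chooses a nonzero zero point so that a skewed range, such as ReLU outputs, uses every integer code. The function names are hypothetical, and this shows the arithmetic only, not how Ceva-NeuPro-Nano implements it in hardware.

```python
def symmetric_params(x_min, x_max, bits=8):
    """Symmetric scheme: zero point fixed at 0, range mirrored around it."""
    qmax = 2 ** (bits - 1) - 1                # 127 for int8, 7 for int4
    max_abs = max(abs(x_min), abs(x_max))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return scale, 0


def asymmetric_params(x_min, x_max, bits=8):
    """Asymmetric scheme: map [x_min, x_max] onto the full signed range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # real 0.0 must be representable
    scale = (x_max - x_min) / (qmax - qmin) if x_max > x_min else 1.0
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x, scale, zero_point, bits=8):
    """q = round(x / scale) + zero_point, clamped to the signed range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """Inverse mapping: x is approximately scale * (q - zero_point)."""
    return scale * (q - zero_point)


# A ReLU6-style activation range [0, 6]:
s_scale, s_zp = symmetric_params(0.0, 6.0)
a_scale, a_zp = asymmetric_params(0.0, 6.0)
print(quantize(0.0, s_scale, s_zp), quantize(6.0, s_scale, s_zp))  # 0 127
print(quantize(0.0, a_scale, a_zp), quantize(6.0, a_scale, a_zp))  # -128 127
```

For a non-negative range, the symmetric scheme never uses the negative half of the int8 codes, so its step size is 6/127 versus 6/255 for the asymmetric scheme; passing `bits=4` shows how much coarser the 4-bit grid becomes.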

The MAC Wars:

Many NPU manufacturers boast about the increasing number of MAC (Multiply-Accumulate) units in their designs, implying that more MACs equate to better performance. However, at Ceva, we have taken a different approach with Ceva-NeuPro-Nano, focusing on MAC utilization rather than sheer numbers.

We recognize that having a large number of MAC units does not necessarily translate to better performance if those units cannot be efficiently utilized. In fact, a higher MAC count often leads to increased power consumption without delivering proportional performance gains. The Ceva-NeuPro-Nano NPU comes in two variants: the Ceva-NPN32 with 32 8×8-bit MACs and the Ceva-NPN64 with 64 8×8-bit MACs. Through extensive experiments, we have demonstrated that our 32-MAC variant can perform comparably to other solutions with 128 MACs. This remarkable efficiency is achieved through higher MAC utilization, made possible by our innovative design and architecture.
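The utilization argument reduces to simple arithmetic: sustained throughput is the peak MAC count multiplied by the fraction of cycles in which those MACs do useful work. The utilization figures below are hypothetical placeholders, not Ceva measurements; they only show what utilization a 32-MAC design would need to match a 128-MAC design.

```python
def effective_macs_per_cycle(num_macs, utilization):
    """Sustained useful MACs per cycle = peak MACs x utilization (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return num_macs * utilization


def breakeven_utilization(small_macs, large_macs, large_utilization):
    """Utilization the smaller array needs to match the larger one."""
    return large_macs * large_utilization / small_macs


# Hypothetical: a 128-MAC array that keeps only 20% of its MACs busy
# sustains 25.6 useful MACs per cycle...
print(effective_macs_per_cycle(128, 0.20))
# ...which a 32-MAC array matches if it reaches 80% utilization.
print(breakeven_utilization(32, 128, 0.20))
```

At equal sustained throughput, the smaller array generally spends less silicon area and leakage power on idle MACs, which is the trade-off the paragraph describes.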

By prioritizing MAC utilization over raw numbers, Ceva-NeuPro-Nano delivers impressive performance while maintaining lower power consumption. This approach aligns perfectly with the requirements of TinyML applications, where power efficiency is paramount. Our focus on efficiency enables Ceva-NeuPro-Nano to outperform competitors with higher MAC counts, proving that intelligent design and optimization are more important than engaging in MAC wars.

Real-World Use Cases:

In our rigorous testing and analysis, we compared the execution of various TinyML models on Ceva-NeuPro-Nano with alternative solutions. The results highlight the value of Ceva-NeuPro-Nano: it offers a 45% smaller area, 3x better energy efficiency, up to 80% less memory consumption, and up to a 10x improvement on TinyML networks.

We achieved these outstanding performance and efficiency metrics by focusing on real-world TinyML use cases spread across three main pillars, the 3 V’s (Vision, Voice, and Vibration):

- For the Vision Pillar, we recognized the important role that lightweight computer vision tasks, such as face detection, landmark detection, object detection, and image classification, play in allowing wearable and IoT devices to interact with and understand their environment. Robust, industry-proven neural network designs that handle these tasks, such as EfficientNet, MobileNet, SqueezeNet, and Tiny YOLO, are a few examples of the models we took into consideration. This ensures that Ceva-NeuPro-Nano handles standard convolutions, depthwise convolutions, and other layers gracefully and efficiently.

- For the Vibration Pillar, we built on Ceva’s unique experience with inertial measurement unit (IMU) hardware, software, and application development for tasks such as human activity recognition and anomaly detection, which play key roles in wearable technology and industrial applications, respectively.

- For the Voice Pillar, the next step in human-machine interaction, we built on our own extensive experience in voice sensing application development (for keyword spotting, noise reduction, and voice recognition) and on our intimate knowledge of the body of work in the field. Considering networks of diverse designs, from RNNs and CNNs to tiny transformers, ensured that the Ceva-NeuPro-Nano design can handle them all.
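Depthwise convolutions, the building block of MobileNet-style networks named in the Vision pillar, show why layer coverage and utilization matter more than peak MAC count. The sketch below counts MACs for a standard k×k convolution and for a depthwise-separable replacement (a k×k depthwise convolution followed by a 1×1 pointwise convolution). The layer shape is an arbitrary example, and the function names are hypothetical.

```python
def conv_macs(h_out, w_out, k, c_in, c_out):
    """MACs for a standard k x k convolution (stride folded into h_out/w_out)."""
    return h_out * w_out * k * k * c_in * c_out


def depthwise_separable_macs(h_out, w_out, k, c_in, c_out):
    """MACs for a k x k depthwise conv followed by a 1x1 pointwise conv."""
    depthwise = h_out * w_out * k * k * c_in   # one k x k filter per input channel
    pointwise = h_out * w_out * c_in * c_out   # 1x1 conv mixes channels
    return depthwise + pointwise


# Example layer: 32x32 output, 3x3 kernel, 32 input and 64 output channels.
std = conv_macs(32, 32, 3, 32, 64)
sep = depthwise_separable_macs(32, 32, 3, 32, 64)
print(std, sep, round(std / sep, 2))  # 18874368 2392064 7.89
```

The separable form needs only a fraction 1/C_out + 1/k² of the standard layer's MACs, but the depthwise part does little work per weight loaded, so it tends to be harder to keep a wide MAC array busy on such layers.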

In consolidating the 3 V’s, we recognized the importance of an often-neglected part of NN-based applications: feature extraction. This motivated the integration of strong control and DSP capabilities into the Ceva-NeuPro-Nano design.
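Feature extraction is typically classic DSP that runs before the network sees any data. As a hedged illustration only (it is not Ceva's DSP library, whose API the article does not describe), the sketch below splits a 1-D audio or vibration signal into overlapping frames and computes two simple per-frame features, RMS energy and zero-crossing rate. Production keyword-spotting pipelines more commonly use log-mel or MFCC features; the function names and frame sizes here are hypothetical.

```python
import math


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames (trailing partial frame dropped)."""
    return [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]


def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign changes (frame_len >= 2)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def extract_features(x, frame_len=256, hop=128):
    """Return one (rms, zcr) feature vector per frame."""
    return [(rms(f), zero_crossing_rate(f)) for f in frame_signal(x, frame_len, hop)]


# Hypothetical input: 1024 samples of a sine with 8 cycles per 256-sample frame.
signal = [math.sin(2 * math.pi * 8 * n / 256) for n in range(1024)]
features = extract_features(signal)
print(len(features))  # 7 frames with a 128-sample hop
```

Each frame yields a compact feature vector in place of 256 raw samples, which is the kind of front-end work the article suggests running on the NPU itself.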

Conclusion:

Ceva-NeuPro-Nano’s unique architecture, efficient MAC utilization, and comprehensive software ecosystem make it a versatile and powerful solution. Its design philosophy, focusing on real-world use cases and application-level challenges, ensures it can handle a wide range of tasks efficiently and seamlessly. With its groundbreaking performance, efficiency, and adaptability, Ceva-NeuPro-Nano is set to revolutionize the field of TinyML, bringing the power of machine learning to billions of resource-constrained devices.

Ceva-NeuPro-Nano joins the Ceva-NeuPro family of NPUs and expands the scope of edge AI workloads that our customers can now address, from TinyML applications to massive generative AI models.